SPDX-License-Identifier: Apache-2.0

Copyright (c) 2020 Intel Corporation

A newer version of this document exists.

This document applies to experience kits deployed using Ansible.

See the updated Telemetry documentation for kits that use the Edge Software Provisioner for deployment, such as the Developer Experience Kit.

Telemetry Support in Smart Edge Open

Overview

Smart Edge Open supports platform and application telemetry with the aid of multiple telemetry projects. This support allows users to retrieve information about the platform, the underlying hardware, the cluster, and deployed applications. The data gathered by telemetry can be used to visualize metrics and resource consumption, set up alerts for certain events, and schedule workloads based on the received telemetry.

Currently, the support for telemetry is focused on metrics; support for application tracing telemetry is planned for a future release.

Architecture

The telemetry components used in Smart Edge Open are deployed from the Edge Controller as Kubernetes* (K8s) pods. Components for telemetry support include:

- collectors

- metric aggregators

- schedulers

- monitoring and visualization tools

Depending on the role of the component, it is deployed as either a Deployment or a DaemonSet. Generally, global components receiving inputs from local collectors are deployed as a Deployment with a single replica, whereas local collectors running on each host are deployed as DaemonSets. Monitoring and visualization components such as Prometheus* and Grafana*, along with TAS (Telemetry Aware Scheduler), are deployed on the Edge Controller, while other components are generally deployed on Edge Nodes. Local collectors running on Edge Nodes that collect platform metrics are deployed as privileged containers. Communication between telemetry components is secured with TLS, either using the native TLS support of a given component or using a reverse proxy running as a container in the component's pod. All the components are deployed as Helm charts.

Flavors and configuration

The deployment of telemetry components in Smart Edge Open is configurable from the Converged Edge Experience Kits (CEEK). The deployment of the Grafana dashboard and the PCM (Processor Counter Monitor) collector is optional (telemetry_grafana_enable is enabled by default; telemetry_pcm_enable is disabled by default). There are four distinct flavors for the deployment of the CollectD collector, each enabling its own set of plugins (selected with telemetry_flavor):

- common (default)

- flexran

- smartcity

- corenetwork

Further information on which plugins each flavor enables can be found in the CollectD section. All flags can be changed in ./inventory/default/group_vars/all/10-open.yml for the default configuration, or in the ./flavors directory for the configuration of a specific platform flavor.
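Before a deployment, it can help to sanity-check the telemetry flags in one place. The following is a minimal sketch, assuming the three flags are read into a Python dict; only the flag names, flavor names, and defaults come from the text above, while the helper validate_telemetry_config is hypothetical and not part of CEEK.

```python
# Hypothetical pre-deployment check for the CEEK telemetry flags described above.
# The flag names, flavor names, and defaults come from this document; the helper
# itself is illustrative only and is not part of CEEK.

TELEMETRY_FLAVORS = {"common", "flexran", "smartcity", "corenetwork"}

TELEMETRY_DEFAULTS = {
    "telemetry_flavor": "common",      # default CollectD flavor
    "telemetry_grafana_enable": True,  # Grafana is deployed by default
    "telemetry_pcm_enable": False,     # PCM is disabled by default
}


def validate_telemetry_config(overrides: dict) -> dict:
    """Merge user overrides onto the documented defaults and validate the result."""
    # Only the three flags documented here are recognized by this sketch.
    unknown = set(overrides) - set(TELEMETRY_DEFAULTS)
    if unknown:
        raise KeyError(f"unknown telemetry flags: {sorted(unknown)}")

    config = {**TELEMETRY_DEFAULTS, **overrides}

    if config["telemetry_flavor"] not in TELEMETRY_FLAVORS:
        raise ValueError(f"unsupported telemetry_flavor: {config['telemetry_flavor']!r}")
    for flag in ("telemetry_grafana_enable", "telemetry_pcm_enable"):
        if not isinstance(config[flag], bool):
            raise TypeError(f"{flag} must be true or false")
    return config


if __name__ == "__main__":
    print(validate_telemetry_config({"telemetry_flavor": "flexran"}))
```

Merging overrides onto explicit defaults keeps the documented default behavior visible in one place, so a typo in a flavor name fails early instead of during deployment.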

Telemetry features

This section provides an overview of each of the components supported within Smart Edge Open and a description of how to use these features.

Prometheus

Prometheus is an open-source, community-driven toolkit for systems monitoring and alerting. The main features include:

- PromQL query language

- multi-dimensional, time-series data model

- support for dashboards and graphs

The main idea behind Prometheus is that it defines a unified metrics data format that can be served by any application that incorporates a simple web server. The data can then be scraped (downloaded) and processed by Prometheus over a simple HTTP/HTTPS connection.
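To make the unified data format concrete: an endpoint serves plain-text lines of the form metric_name{label="value",...} sample_value, optionally preceded by # HELP and # TYPE comment lines. The sketch below parses a simplified subset of that text format, assuming label values contain no escaped quotes, commas, or braces and that samples carry no timestamps. The sample payload is made up and parse_exposition is a hypothetical helper, not part of Prometheus.

```python
import re

# Simplified subset of the Prometheus text format. Assumptions: label values
# contain no escaped quotes, commas, or braces, and samples carry no timestamp.
LINE_RE = re.compile(r"^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)$")
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')

# Made-up payload in the style of a Node Exporter endpoint.
SAMPLE = """\
# HELP node_memory_Active_anon_bytes Memory information field Active_anon_bytes.
# TYPE node_memory_Active_anon_bytes gauge
node_memory_Active_anon_bytes 1.2345e+08
# TYPE node_cpu_seconds_total counter
node_cpu_seconds_total{cpu="0",mode="idle"} 1234.5
"""


def parse_exposition(text: str) -> list:
    """Parse exposition text into (name, labels, value) tuples."""
    samples = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):  # skip blank, HELP, and TYPE lines
            continue
        match = LINE_RE.match(line)
        if match is None:
            raise ValueError(f"unparseable line: {line!r}")
        name, label_str, value = match.groups()
        labels = dict(LABEL_RE.findall(label_str or ""))
        samples.append((name, labels, float(value)))  # float() also accepts NaN and +Inf
    return samples


if __name__ == "__main__":
    for sample in parse_exposition(SAMPLE):
        print(sample)
```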

In Smart Edge Open, Prometheus is deployed as a K8s Deployment with a single pod/replica on the Edge Controller node. It is configured out of the box to scrape all other telemetry endpoints/collectors enabled in Smart Edge Open and gather data from them. Prometheus is enabled in the CEEK by default with the telemetry/prometheus role.

Usage

-

To connect to a Prometheus dashboard, start a browser on the same network as the Smart Edge Open cluster and enter the address of the dashboard (where the IP address is the address of the Edge Controller)

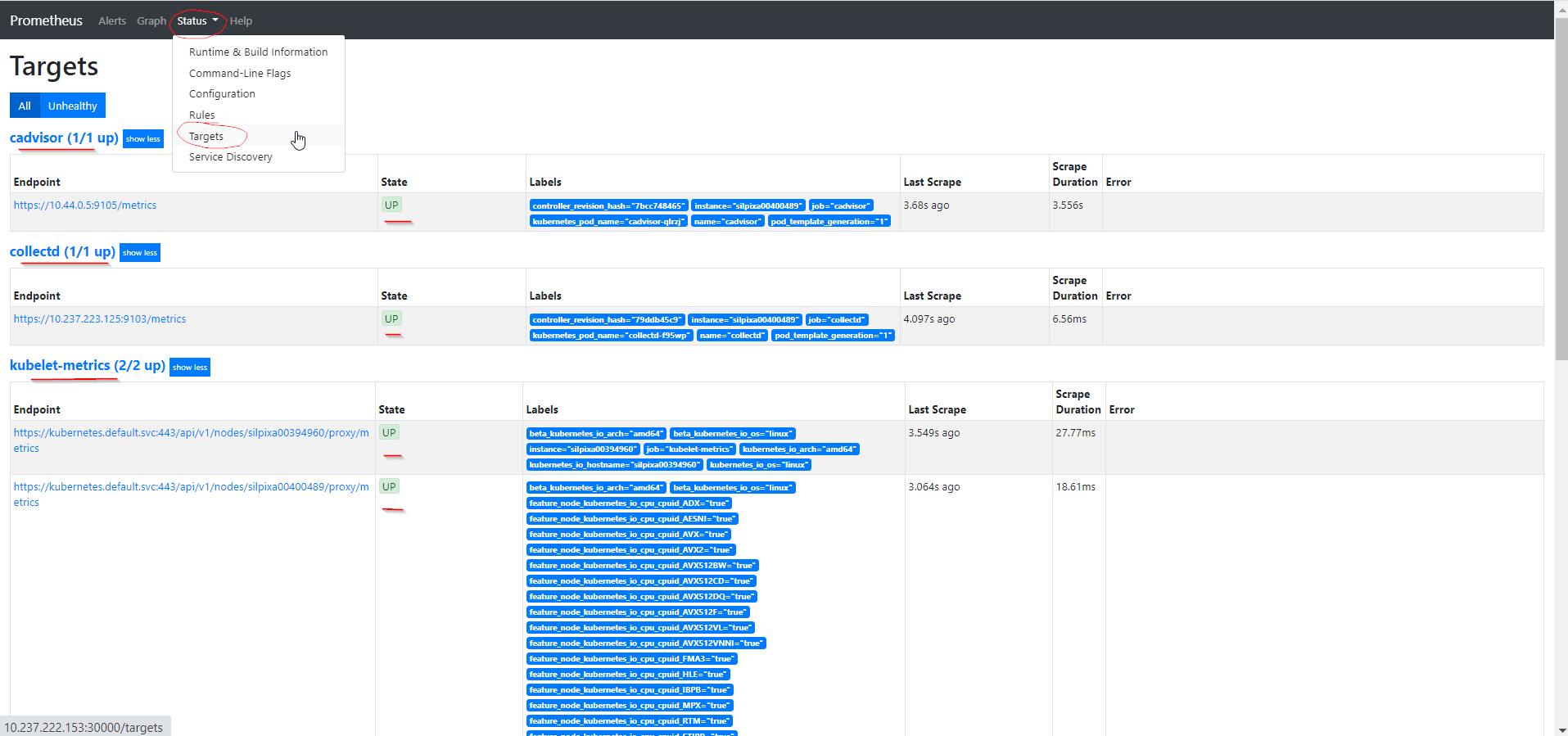

From browser: http://<controller-ip>:30000 - To list the targets/endpoints currently scraped by Prometheus, navigate to the

statustab and selecttargetsfrom the drop-down menu.

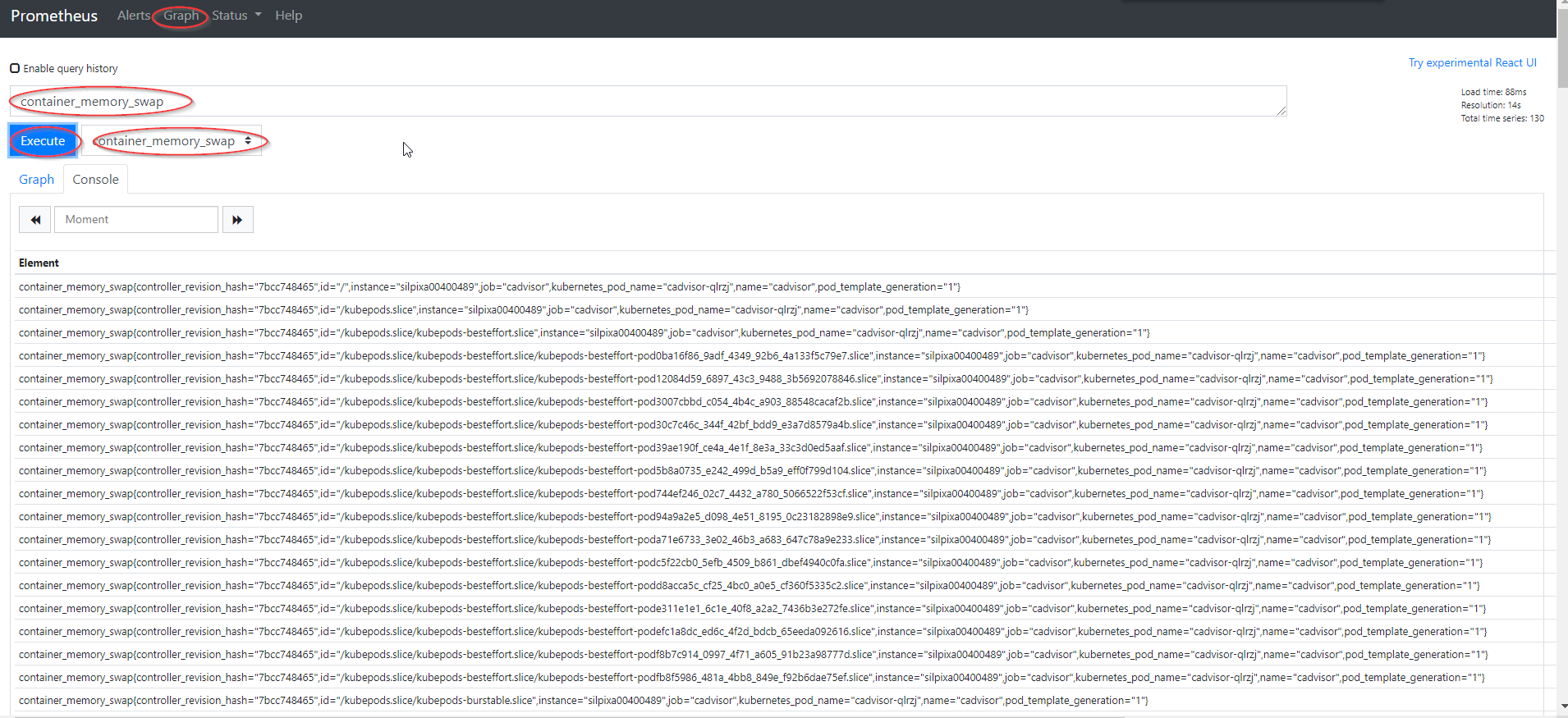

- To query a specific metric from one of the collectors, navigate to the Graph tab, select a metric from the insert-metric-at-cursor list or type its name into the query field above it, and press Execute.

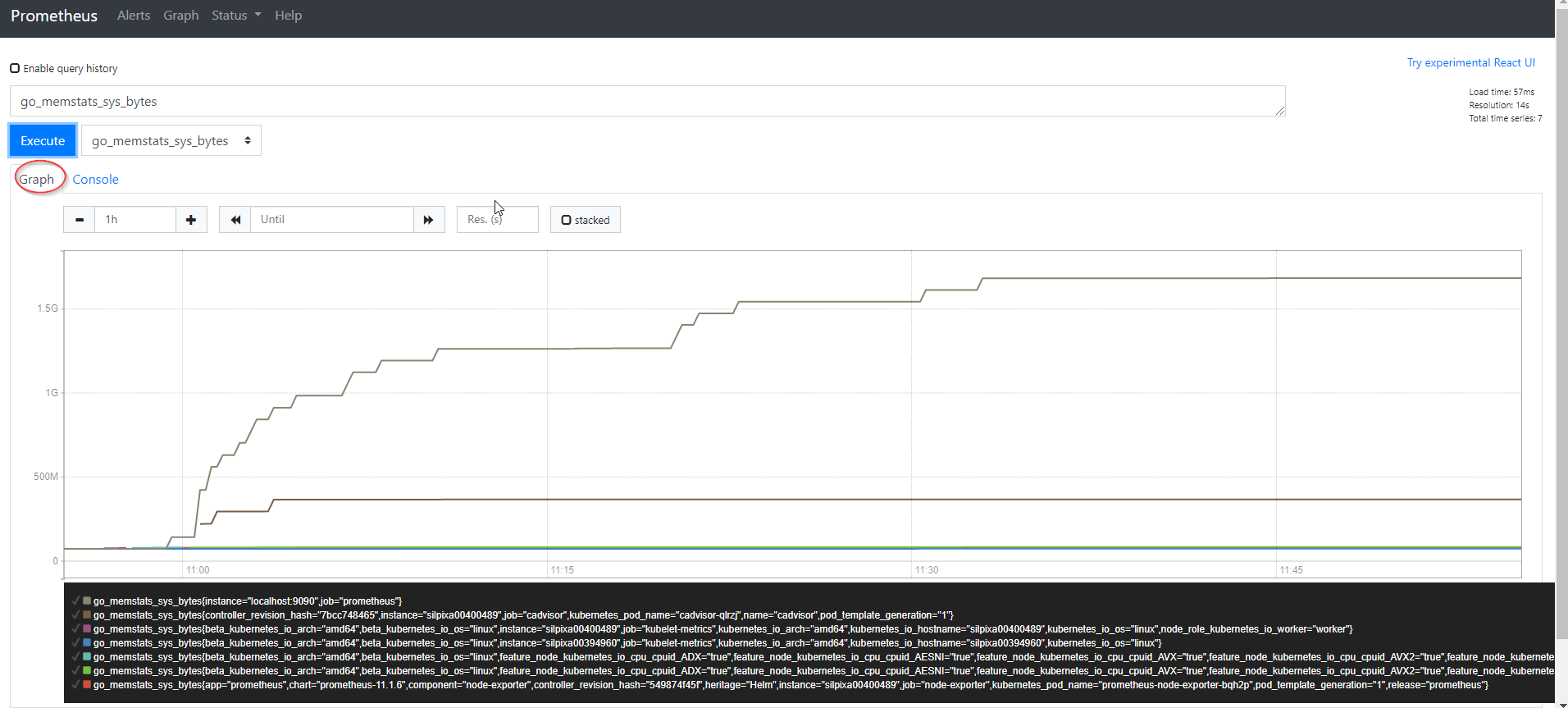

- To graph the selected metrics, select the Graph tab.
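The same queries can be scripted through the Prometheus HTTP API, where an instant query is a GET request to /api/v1/query with a query parameter and the answer is JSON. A minimal sketch, assuming the NodePort 30000 used for the dashboard above also serves the API without extra authentication; build_query_url and instant_values are hypothetical helpers, and EXAMPLE_RESPONSE is a hand-written response in the API's vector result format, not real cluster output.

```python
import json
from urllib.parse import urlencode


def build_query_url(controller_ip: str, promql: str, port: int = 30000) -> str:
    """Return an instant-query URL for the Prometheus HTTP API."""
    return f"http://{controller_ip}:{port}/api/v1/query?" + urlencode({"query": promql})


def instant_values(response_body: str) -> dict:
    """Map each series' 'instance' label to its value from a vector result."""
    payload = json.loads(response_body)
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error')}")
    result = {}
    for series in payload["data"]["result"]:
        _timestamp, value = series["value"]  # value is [unix_time, "string value"]
        result[series["metric"].get("instance", "")] = float(value)
    return result


# Hand-written example response; the instance address is hypothetical.
EXAMPLE_RESPONSE = """{
  "status": "success",
  "data": {
    "resultType": "vector",
    "result": [
      {"metric": {"__name__": "node_memory_Active_anon_bytes", "instance": "10.0.0.2:9100"},
       "value": [1590246707.0, "123450000"]}
    ]
  }
}"""

if __name__ == "__main__":
    print(build_query_url("10.0.0.1", "node_memory_Active_anon_bytes"))
    print(instant_values(EXAMPLE_RESPONSE))
    # Against a live cluster (requires network access):
    # from urllib.request import urlopen
    # body = urlopen(build_query_url("<controller-ip>", "up")).read().decode()
```

Sample values arrive as strings in the JSON response, which is why the helper converts them with float().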

Grafana

Grafana is open-source visualization and analytics software. It takes data from external sources and displays it to the user via dashboards. It enables users to create customized dashboards based on the information they want to monitor and allows additional data sources to be provisioned. In Smart Edge Open, the Grafana pod is deployed on the control plane (Edge Controller) as a K8s Deployment and is provisioned by default with data from Prometheus. It is enabled by default in CEEK and can be enabled/disabled by changing the telemetry_grafana_enable flag.

Usage

- To connect to the Grafana dashboard, start a browser on the same network as the Smart Edge Open cluster and enter the address of the dashboard (where the IP address is the address of the Edge Controller):

    From browser:
    http://<controller-ip>:32000

- Extract the Grafana password by running the following command on the Kubernetes controller:

    kubectl get secrets/grafana -n telemetry -o json | jq -r '.data."admin-password"' | base64 -d

- Log in to the dashboard using the admin login and the password from the previous step.

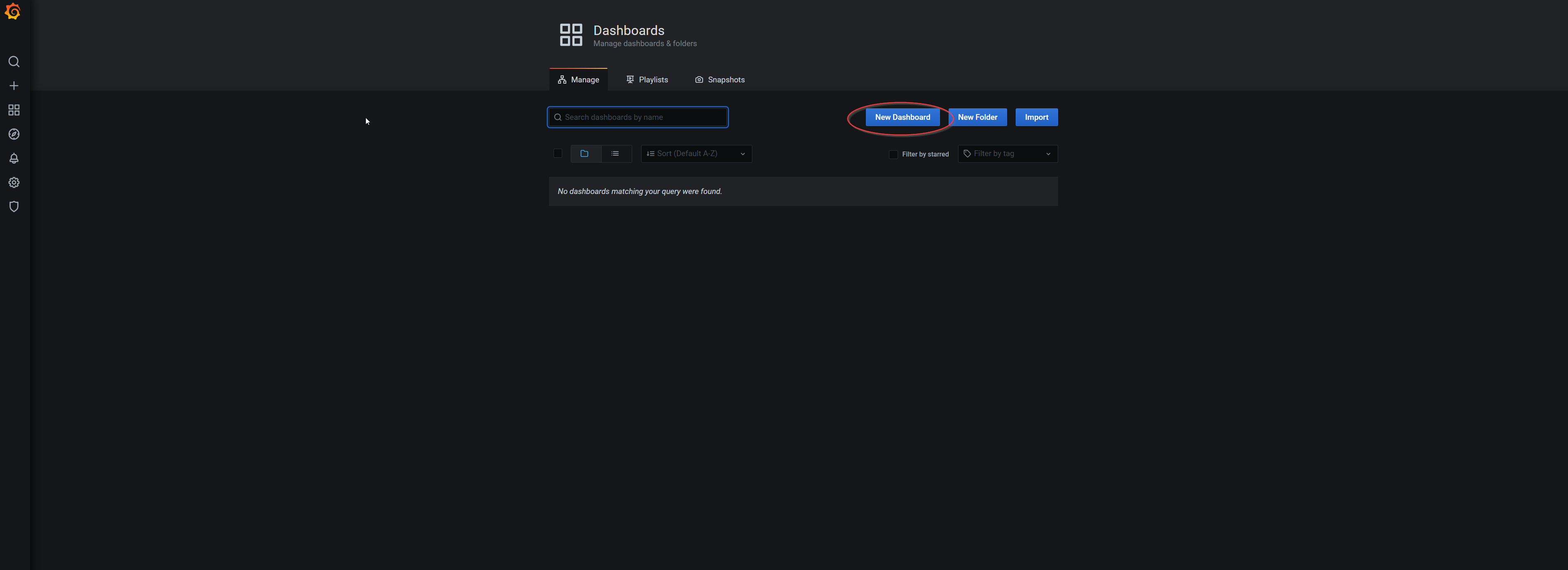

- To create a new dashboard, navigate to http://<controller-ip>:32000/dashboards.

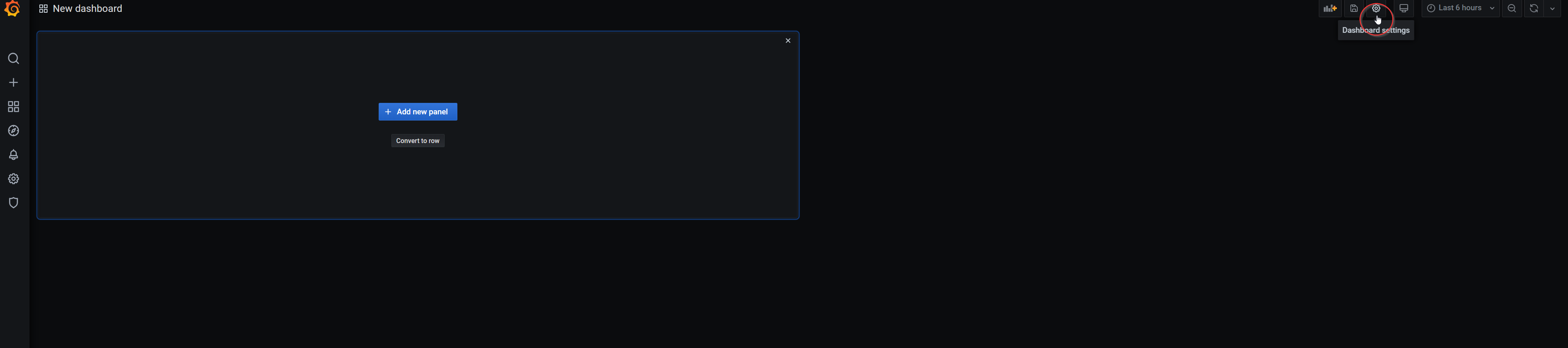

- Navigate to dashboard settings.

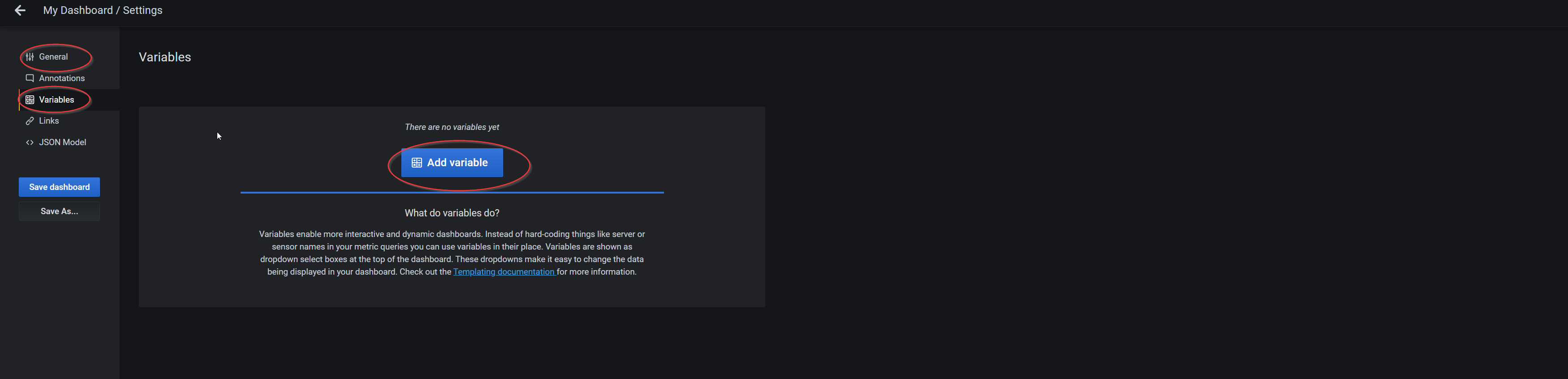

-

Change the name of dashboard in the

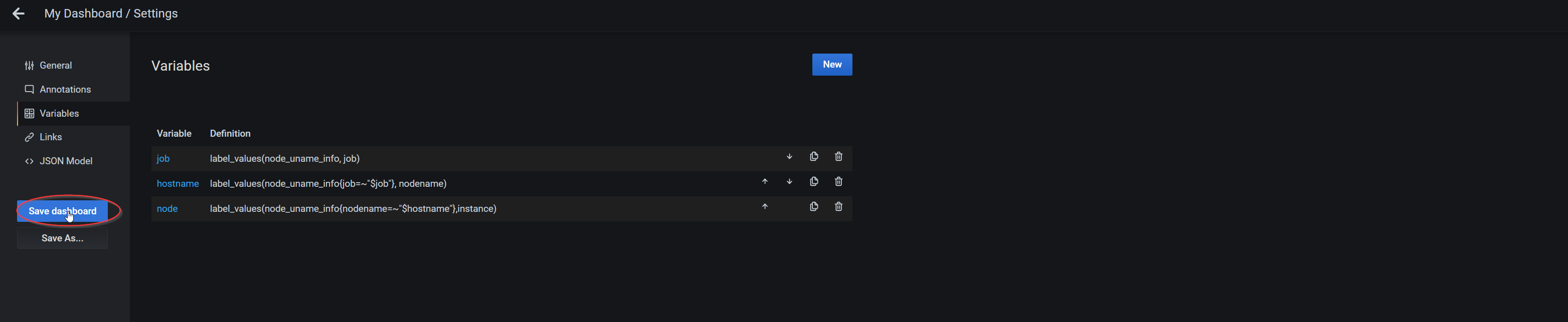

General taband add new variables per the following table from theVariables tab: | Field | variable 1 | variable 2 | variable 3 |

|——————|:———————————-:|:—————————————————-:|:————————————————————-:|

| Name | job | hostname | node |

| Label | JOB | Host | IP |

| Data source | Prometheus TLS | Prometheus TLS | Prometheus TLS |

| Query | label_values(node_uname_info, job) | label_values(node_uname_info{job=~”$job”}, nodename) | label_values(node_uname_info{nodename=~”$hostname”},instance) |

| Sort | Alphabetical(asc) | Disabled | Alphabetical(asc) |

| Multi-value | | Enable | |

| Include All opt. | | Enable | Enable |

| Field | variable 1 | variable 2 | variable 3 |

|——————|:———————————-:|:—————————————————-:|:————————————————————-:|

| Name | job | hostname | node |

| Label | JOB | Host | IP |

| Data source | Prometheus TLS | Prometheus TLS | Prometheus TLS |

| Query | label_values(node_uname_info, job) | label_values(node_uname_info{job=~”$job”}, nodename) | label_values(node_uname_info{nodename=~”$hostname”},instance) |

| Sort | Alphabetical(asc) | Disabled | Alphabetical(asc) |

| Multi-value | | Enable | |

| Include All opt. | | Enable | Enable | - Save the dashboard.

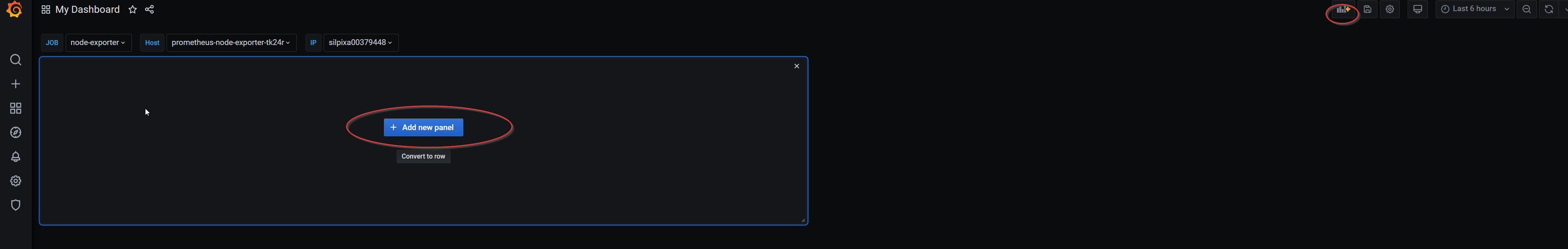

- Go to the newly created dashboard and add a panel.

-

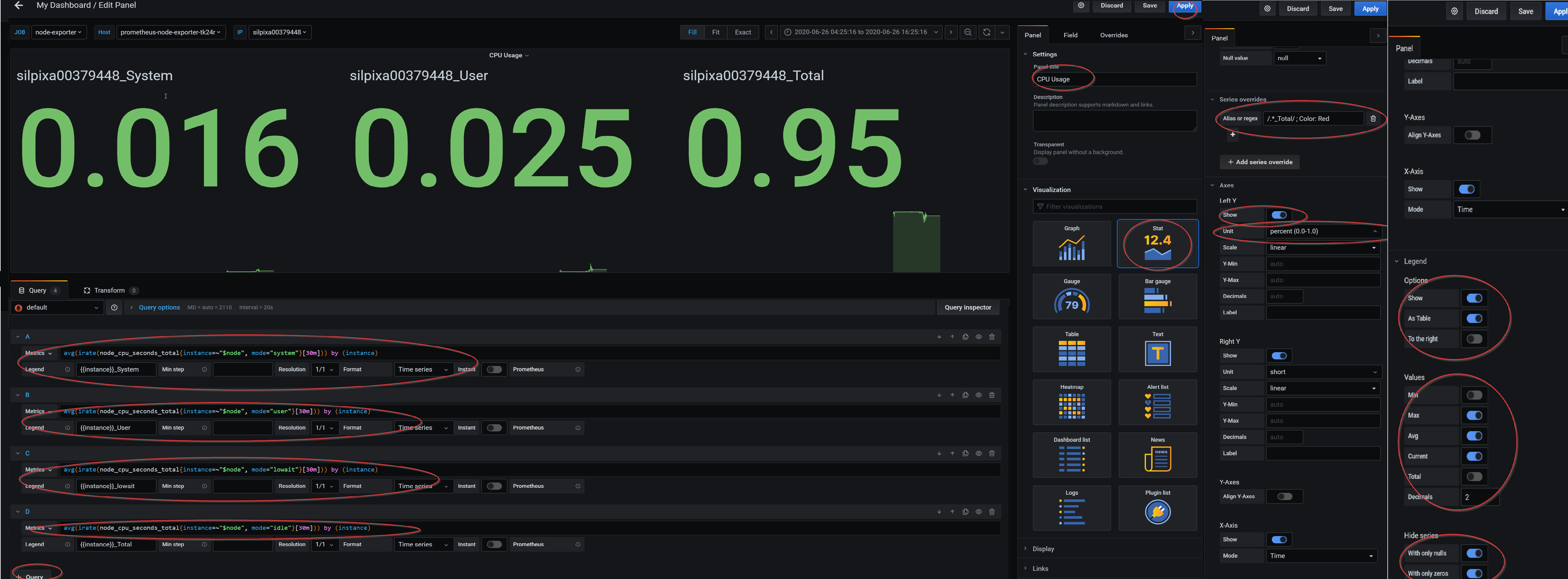

Configure the panel per the configuration below and press apply.

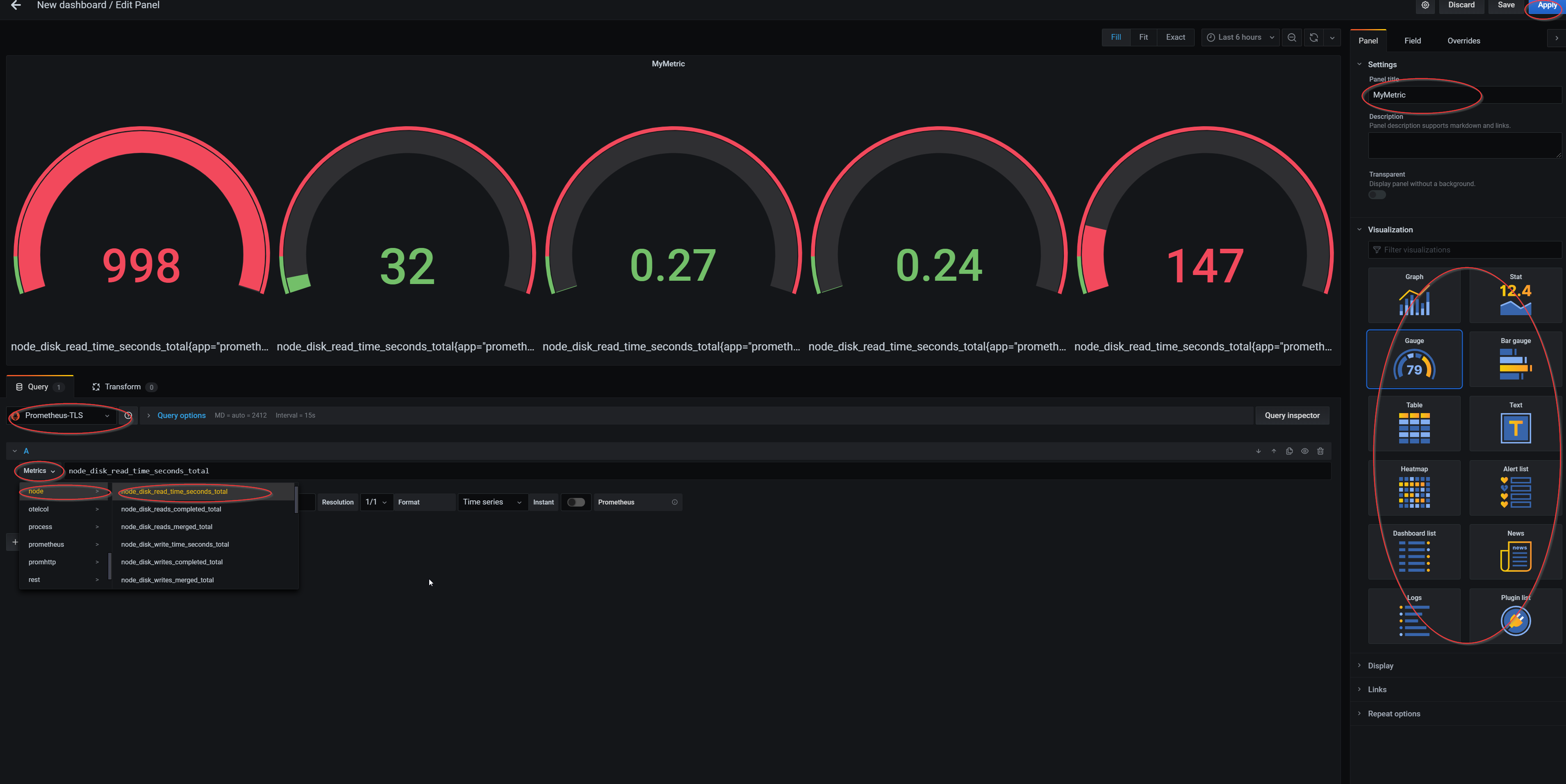

- To query a specific metric in the panel, select a data source (Prometheus-TLS) and pick the metric to monitor. From the Visualization tab, pick the desired style to display the metric. Give the panel a name and press Apply in the upper right corner.

Prometheus metrics can be queried using PromQL. Queried metrics can then be processed using PromQL functions or Grafana transformations.

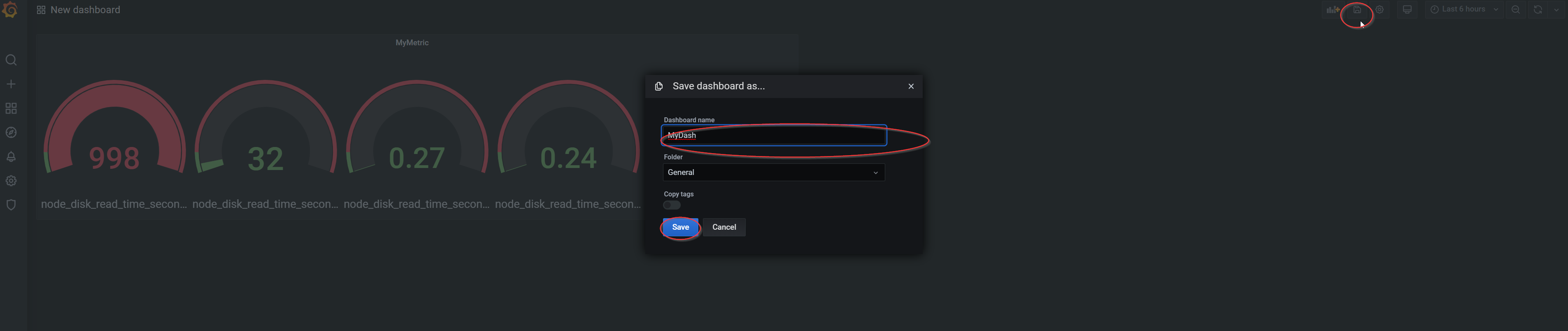

- To save the dashboard, click save in the upper right corner.

Smart Edge Open deploys Grafana with a simple Example dashboard. You can use this dashboard to learn how to use Grafana and create your own dashboards.
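When automating access to Grafana, the password-extraction pipeline from the Grafana usage steps (kubectl ... -o json, then jq, then base64 -d) can be reproduced in Python. A minimal sketch, assuming kubectl is installed and configured for the Smart Edge Open controller; the secret name, namespace, and admin-password key come from that command, while decode_admin_password and fetch_grafana_password are hypothetical helper names.

```python
import base64
import json
import subprocess


def decode_admin_password(secret_json: str) -> str:
    """Equivalent of: jq -r '.data."admin-password"' | base64 -d"""
    secret = json.loads(secret_json)
    return base64.b64decode(secret["data"]["admin-password"]).decode("utf-8")


def fetch_grafana_password() -> str:
    """Run the kubectl command from the Grafana steps and decode the password.

    Assumes kubectl is on the PATH and points at the Smart Edge Open controller.
    """
    out = subprocess.run(
        ["kubectl", "get", "secrets/grafana", "-n", "telemetry", "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return decode_admin_password(out)


if __name__ == "__main__":
    # Offline demonstration with a made-up secret; no cluster is contacted.
    fake = json.dumps({"data": {"admin-password": base64.b64encode(b"changeme").decode()}})
    print(decode_admin_password(fake))
```

Kubernetes stores Secret values base64-encoded in the data field, which is why the decode step is needed after extracting the key.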

Node Exporter

Node Exporter is a Prometheus exporter that exposes hardware and OS metrics of *NIX kernels. The metrics are gathered from the kernel and exposed on a web server so they can be scraped by Prometheus. In Smart Edge Open, the Node Exporter pod is deployed as a K8s DaemonSet; it is a privileged pod that runs on every Edge Node in the cluster. It is enabled by default in CEEK.

Usage

- To access metrics available from the Node Exporter, connect to the Prometheus dashboard.

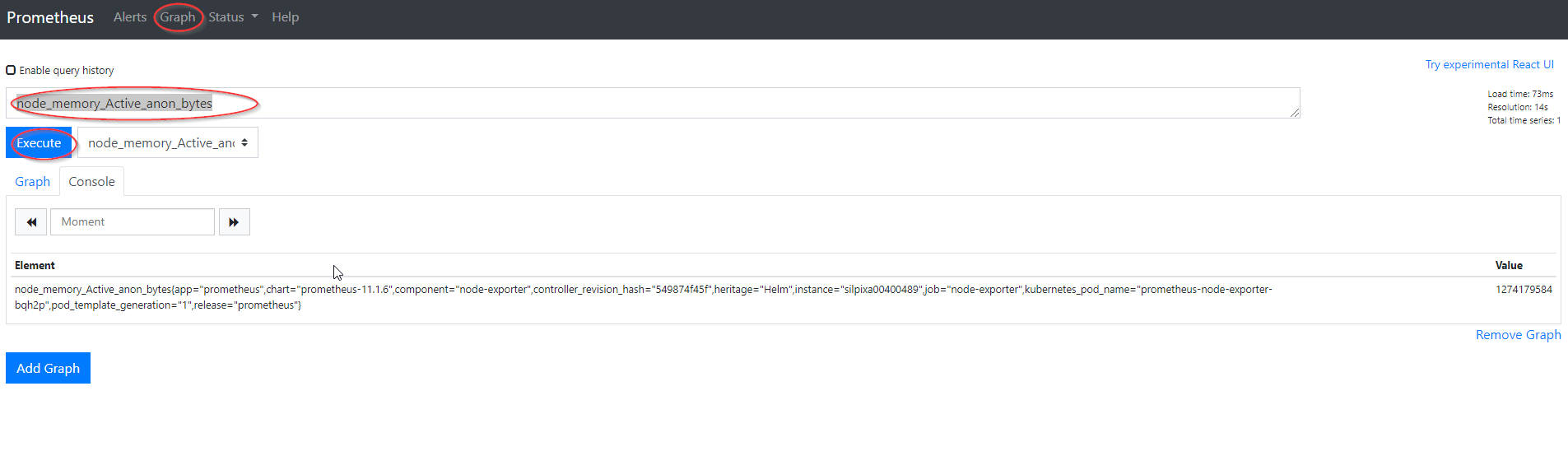

- Look up an example Node Exporter metric by specifying the metric name (e.g., node_memory_Active_anon_bytes) and pressing Execute under the Graph tab.

VCAC-A

Node Exporter also enables exposure of telemetry from Intel’s VCAC-A card to Prometheus. The telemetry from the VCAC-A card is saved into a text file; this text file is used as an input to the Node Exporter. More information on VCAC-A usage in Smart Edge Open is available here.
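Feeding a text file to Node Exporter follows the pattern of Node Exporter's textfile collector, which reads files ending in .prom from the directory given by --collector.textfile.directory. The sketch below shows that pattern; it is not the VCAC-A agent used by Smart Edge Open. The output directory and readings are made up, the vpu_device_* metric names are borrowed from the TAS policy example later in this document, and write_textfile_metrics is a hypothetical helper.

```python
import os
import tempfile


def write_textfile_metrics(directory: str, name: str, metrics: dict) -> str:
    """Atomically write gauge metrics to <directory>/<name>.prom.

    The file is written under a temporary .tmp name (ignored by the collector,
    which only reads *.prom) and then renamed, so a scrape never sees a
    half-written file.
    """
    lines = []
    for metric, value in sorted(metrics.items()):
        lines.append(f"# TYPE {metric} gauge")
        lines.append(f"{metric} {value}")
    payload = "\n".join(lines) + "\n"

    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as handle:
            handle.write(payload)
        os.chmod(tmp_path, 0o644)  # mkstemp creates 0600; let the exporter read it
        final_path = os.path.join(directory, f"{name}.prom")
        os.replace(tmp_path, final_path)  # atomic within one filesystem
    except BaseException:
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)
        raise
    return final_path


if __name__ == "__main__":
    # Stand-in for the directory passed to --collector.textfile.directory.
    out_dir = tempfile.mkdtemp()
    path = write_textfile_metrics(
        out_dir, "vcaca", {"vpu_device_thermal": 48.0, "vpu_device_utilization": 12.5}
    )
    with open(path) as f:
        print(f.read())
```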

cAdvisor

cAdvisor is a daemon that provides information about containers running in the cluster.

It collects, aggregates, and exports data about running containers, such as resource usage, isolation parameters, and network statistics, so that the data can be easily obtained by metrics monitoring tools such as Prometheus. In Smart Edge Open, cAdvisor is deployed as a K8s DaemonSet on every Edge Node and scraped by Prometheus.

Usage

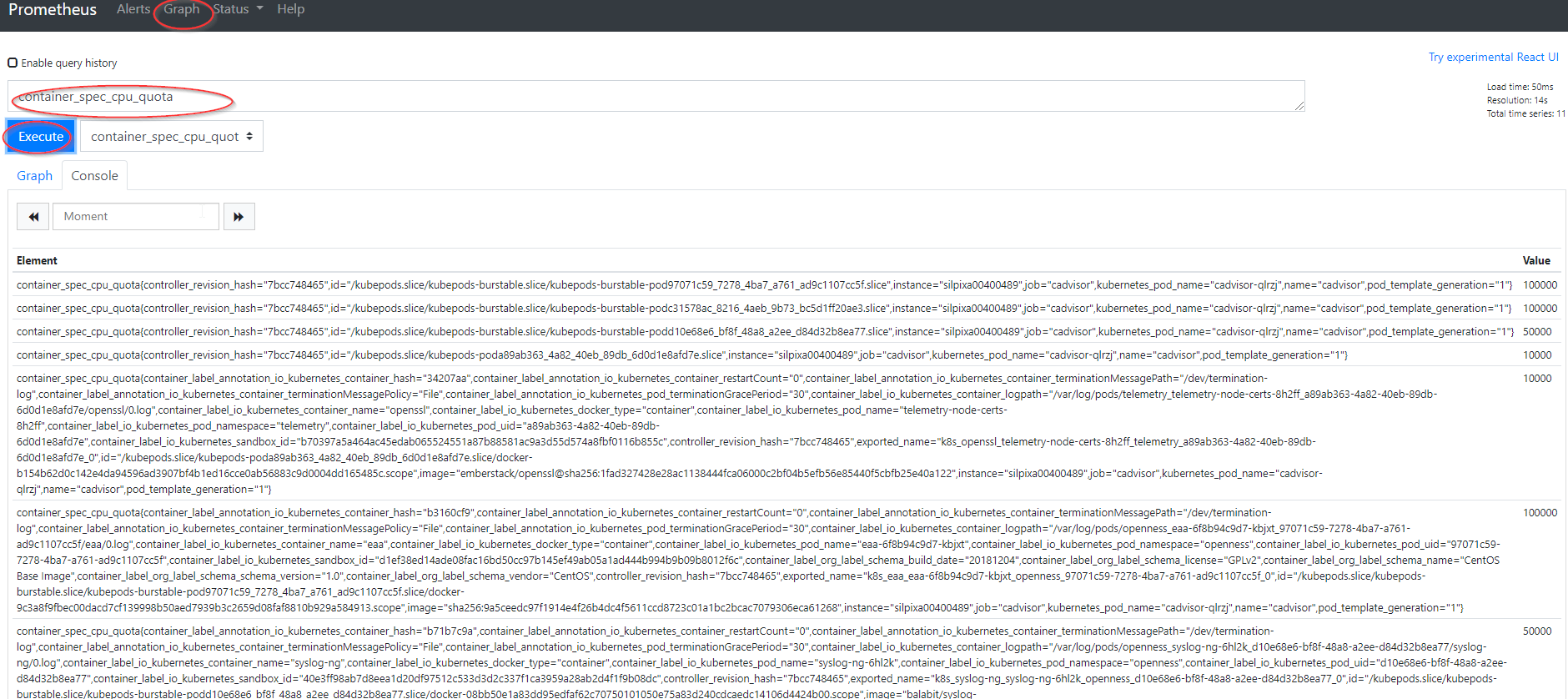

- To access metrics available from cAdvisor, connect to the Prometheus dashboard.

- Look up an example cAdvisor metric by specifying the metric name (e.g., container_spec_cpu_quota) and pressing Execute under the Graph tab.
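The value of container_spec_cpu_quota is easier to interpret next to container_spec_cpu_period: both are CFS settings in microseconds, so their ratio is the container's CPU limit in cores (a quota of 50000 µs per 100000 µs period is half a core). In PromQL, dividing the two series should give the same ratio per container, since both carry the same container labels. The Python below only illustrates the arithmetic; cpu_limit_cores is a hypothetical helper.

```python
from typing import Optional

DEFAULT_CFS_PERIOD_US = 100_000  # Linux default CFS period: 100 ms


def cpu_limit_cores(cpu_quota_us: float, cpu_period_us: float = DEFAULT_CFS_PERIOD_US) -> Optional[float]:
    """Convert a CFS quota/period pair (microseconds) into a CPU limit in cores.

    Returns None when no limit is set (quota <= 0); in CFS a quota of -1
    means "unlimited".
    """
    if cpu_period_us <= 0:
        raise ValueError("CFS period must be positive")
    if cpu_quota_us <= 0:
        return None
    return cpu_quota_us / cpu_period_us


if __name__ == "__main__":
    print(cpu_limit_cores(50_000))   # 0.5, half a core
    print(cpu_limit_cores(200_000))  # 2.0, two cores
```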

CollectD

CollectD is a daemon/collector enabling the collection of hardware metrics from computers and network equipment. It supports plugins, which extend its functionality to the collection of specific metrics such as Intel® RDT, Intel PMU, and ovs-dpdk. The collected metrics are exposed to the Prometheus monitoring tool via the write_prometheus plugin. In Smart Edge Open, CollectD is supported with the help of the OPNFV Barometer project, using its Docker image and available plugins. As part of the Smart Edge Open release, a CollectD plugin for Intel® FPGA Programmable Acceleration Card (Intel® FPGA PAC) N3000 telemetry (power and temperature) is available. In Smart Edge Open, the CollectD pod is deployed as a K8s DaemonSet on every available Edge Node, and it is deployed as a privileged container.

Plugins

There are four distinct sets of plugins (flavors) for CollectD deployment, to be used depending on the use case/workload being deployed on Smart Edge Open: common (the default), corenetwork, flexran, and smartcity. The following table lists the CollectD plugins enabled for each CEEK flavor:

| Common | Core Network | FlexRAN | SmartCity |

|---|---|---|---|

| cpu | cpu | cpu | cpu |

| cpufreq | cpufreq | cpufreq | cpufreq |

| load | load | load | load |

| hugepages | hugepages | hugepages | hugepages |

| intel_pmu | intel_pmu | intel_pmu | intel_pmu |

| intel_rdt | intel_rdt | intel_rdt | intel_rdt |

| ipmi | ipmi | ipmi | ipmi |

| write_prometheus | write_prometheus | write_prometheus | write_prometheus |

| ovs_pmd_stats | ovs_stats | ovs_pmd_stat | |

| ovs_stats | fpga_telemetry |

Usage

-

Select the flavor for the deployment of CollectD from the CEEK during Smart Edge Open deployment; the flavor is to be selected with

telemetry_flavor: <flavor name>.In the event of using the

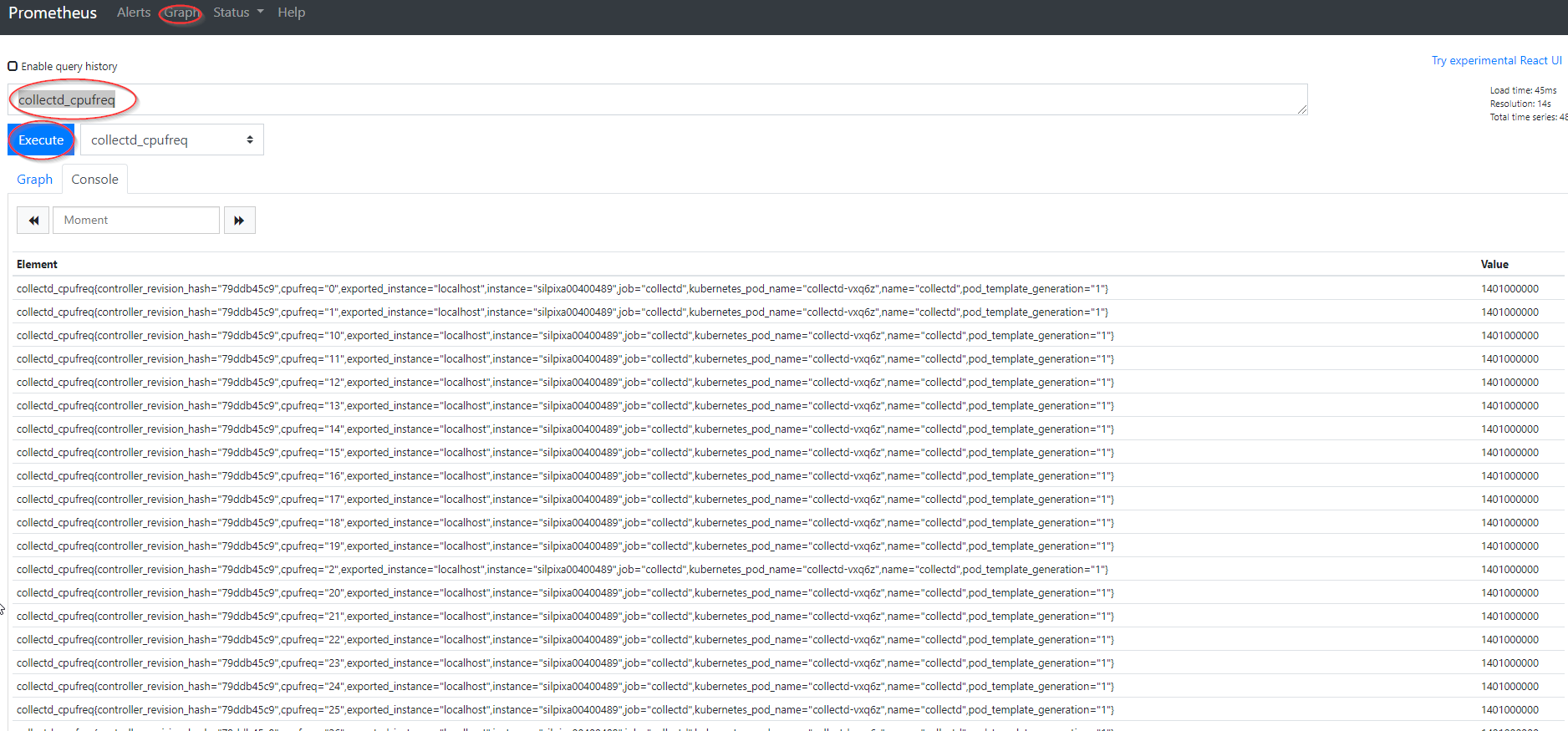

flexranprofile,OPAE_SDK_1.3.7-5_el7.zipneeds to be available in./ido-converged-edge-experience-kits/ceek/opae_fpgadirectory; for details about the packages, see FPGA support in Smart Edge Open - To access metrics available from CollectD, connect to the Prometheus dashboard.

- Look up an example CollectD metric by specifying the metric name (e.g., collectd_cpufreq) and pressing Execute under the Graph tab.

OpenTelemetry

OpenTelemetry is a project providing APIs and libraries that enable the collection of telemetry from edge services and network functions. It provides a mechanism to capture metrics and traces within applications, with support for multiple programming languages. The captured metrics are exposed to OpenTelemetry agents and collectors, which handle the data and forward it to the desired back-end application(s) for processing and analysis. Supported back-ends include popular projects such as Prometheus (metrics), Jaeger, and Zipkin (traces). The OpenTelemetry agent and collector are built around the concepts of receivers and exporters. A receiver provides a mechanism to get data into the agent or collector; multiple receivers are supported, and at least one must be configured. Conversely, an exporter provides a mechanism to send data on to its destination (for example, a back-end or another collector's receiver); similarly, multiple exporters are supported, and at least one must be set up.

As part of the Smart Edge Open release, only support for collecting metrics and exposing them to Prometheus is enabled. The deployment model chosen for OpenTelemetry is a global collector pod receiving data from multiple agents; the agents are deployed as side-car containers in each instance of the application pod.

- The application exports data to the agent locally.

- The agent receives the data and exports it to the collector over a TLS-secured connection, addressing the collector by its K8s service name and port.

- The collector exposes all the available data received from multiple agents on an endpoint which is being scraped by Prometheus.

The OpenCensus exporter/receiver is used in the default Smart Edge Open configuration for the agent and collector to collect metrics from an application. A sample application pod, which generates random numbers, aggregates them, exposes the metrics, and contains a reference side-car OpenTelemetry agent, is available in the Edge Apps repository.

Usage

- Pull the Edge Apps repository.

- Build the sample telemetry application Docker image and push it to the local Harbor registry from the Edge Apps repo:

    cd edgeapps/applications/telemetry-sample-app/image/
    ./build.sh push <harbor_registry_ip> <port>

- Create a secret using the root CA created as part of the CEEK telemetry deployment (this authorizes against the Collector certificates):

    cd edgeapps/applications/telemetry-sample-app/
    ./create-secret.sh

- Configure and deploy the sample telemetry application with the side-car OpenTelemetry agent from the Edge Apps repo using Helm. Edit edgeapps/applications/telemetry-sample-app/opentelemetry-agent/values.yaml and change app:image:repository: 10.0.0.1:30003/intel/metricapp to the IP address of the Harbor registry, then run:

    cd edgeapps/applications/telemetry-sample-app/
    helm install otel-agent opentelemetry-agent

- Check that the app/agent pod is deployed:

    kubectl get pods otel-agent-sidecar
    NAME                 READY   STATUS    RESTARTS   AGE
    otel-agent-sidecar   2/2     Running   0          2m26s

- Open your browser and go to http://<controller_IP>:30000/graph.

- Input opentelemetry_example_random_number_bucket into the query field and click Execute.

- Click the Graph button and check that the graph is plotted (the count of numbers in the buckets should increase).

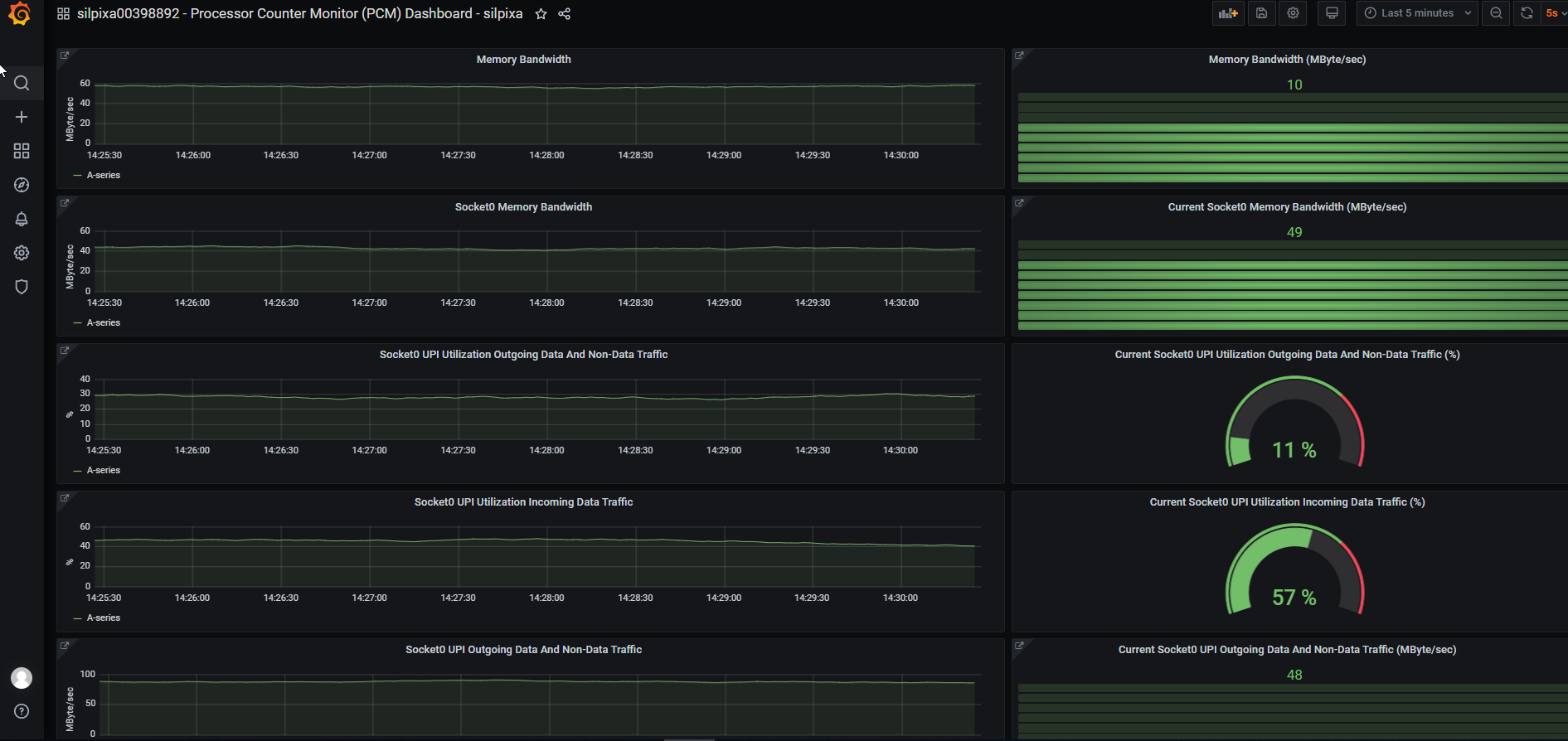
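The _bucket suffix in the query above indicates a Prometheus-style histogram: each bucket series, labeled with an upper bound le, counts every observation less than or equal to that bound, so the counts are cumulative and keep growing as the sample application generates numbers. The sketch below mimics that bookkeeping for a stream of random numbers; the bucket bounds, the value range, and the helper names are assumptions and are not taken from the sample application.

```python
import math
import random


def cumulative_buckets(observations, bounds):
    """Return {upper_bound: count} using Prometheus histogram semantics.

    Each bucket counts every observation <= its upper bound (so counts are
    cumulative), and an implicit +Inf bucket counts all observations.
    """
    upper_bounds = sorted(bounds) + [math.inf]
    counts = {le: 0 for le in upper_bounds}
    for value in observations:
        for le in upper_bounds:
            if value <= le:
                counts[le] += 1
    return counts


def format_le(le: float) -> str:
    """Render a bucket bound the way Prometheus labels it."""
    return "+Inf" if math.isinf(le) else f"{le:g}"


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical stand-in for the sample app: random integers in [0, 100].
    numbers = [rng.randint(0, 100) for _ in range(1000)]
    for le, count in cumulative_buckets(numbers, [25, 50, 75]).items():
        print(f'opentelemetry_example_random_number_bucket{{le="{format_le(le)}"}} {count}')
```

Because every observation lands in the +Inf bucket and in each bucket above it, the bucket counts never decrease from one bound to the next, which is what the rising graph reflects.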

PCM

Processor Counter Monitor (PCM) is an application programming interface (API) and a set of tools based on the API to monitor performance and energy metrics of Intel® Core™, Xeon®, Atom™ and Xeon Phi™ processors. In Smart Edge Open, the PCM pod is deployed as a K8s DaemonSet on every available node. PCM metrics are exposed to Prometheus via the host's NodePort on each Edge Node.

NOTE: The PCM feature is intended to run on physical hardware (i.e., virtualized Edge Nodes running in VMs are not supported in Smart Edge Open). Therefore, this feature is disabled by default. It can be enabled by setting the telemetry_pcm_enable flag in CEEK. Additionally, a preset dashboard is created for PCM in Grafana, visualizing the most crucial metrics.

NOTE: There is currently a limitation in Smart Edge Open where a conflict between the deployment of CollectD and PCM prevents the PCM server from starting successfully. It is advised to run PCM with CollectD disabled at this time.

Usage

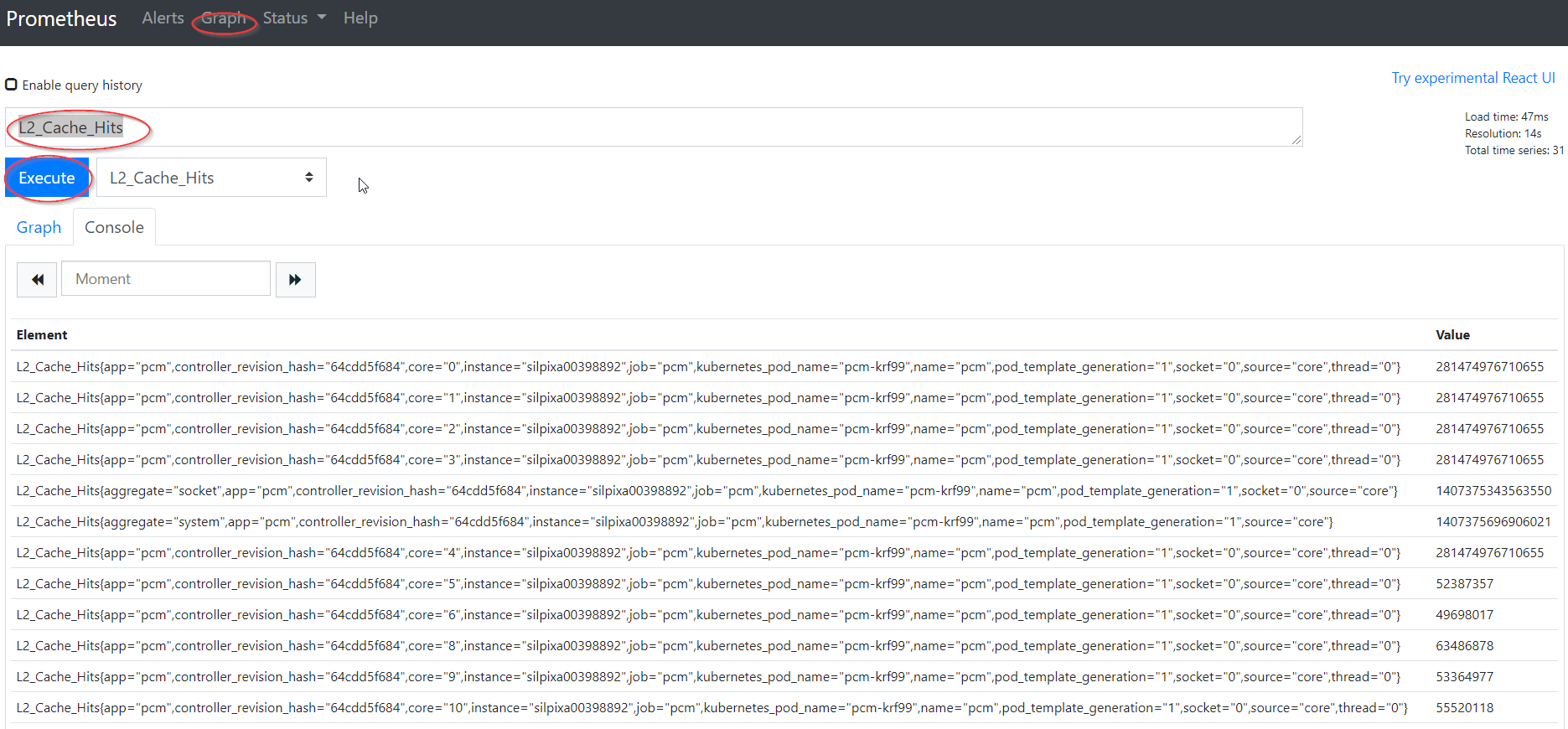

- To access metrics available from PCM, connect to the Prometheus dashboard.

- Look up an example PCM metric by specifying the metric name (e.g., L2_Cache_Hits) and pressing Execute under the Graph tab.

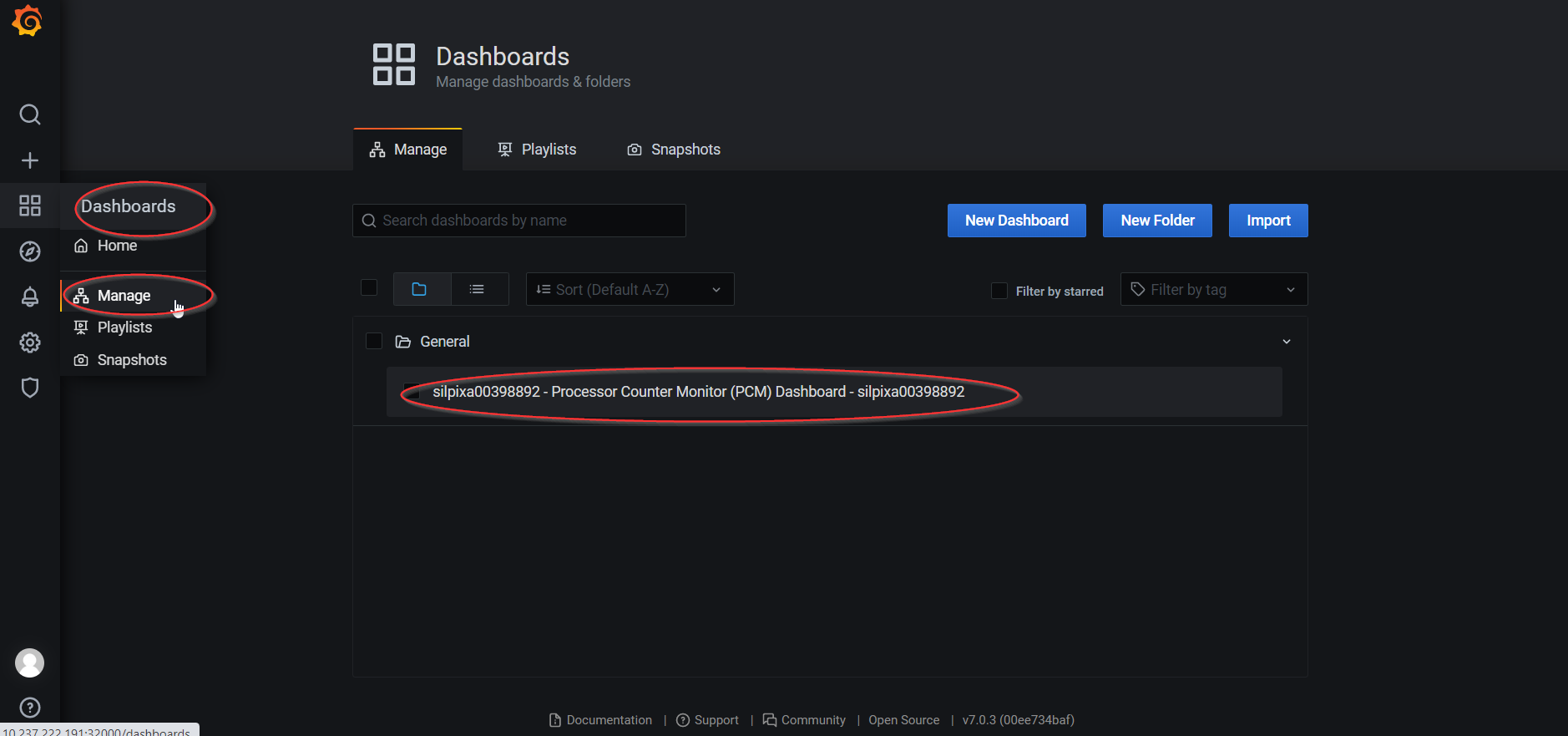

- Log into Grafana.

- Select the dashboard to visualize metrics from the desired node and click on it.

- Metrics are visualized.

TAS

The Telemetry Aware Scheduler (TAS) enables the user to make K8s scheduling decisions based on the metrics available from telemetry. This is crucial for a variety of Edge use cases and workloads where the workloads must be balanced and deployed on the most suitable node based on hardware capability and performance. The user can create a set of policies defining the rules to which pod placement must adhere. TAS also provides functionality to de-schedule pods from given nodes if a rule is violated. TAS consists of a TAS Extender, which is an extension to the K8s scheduler: it correlates the scheduling policies with deployment strategies and returns decisions to the K8s scheduler. TAS also includes a TAS Controller that consumes TAS policies and makes them locally available to TAS components. For TAS to read in the metrics, a metrics pipeline that exposes metrics to a K8s API must be established. In Smart Edge Open, the metrics pipeline consists of:

- Prometheus: responsible for collecting and providing metrics.

- Prometheus Adapter: exposes the metrics from Prometheus to a K8s API and is configured to provide metrics from the Node Exporter and CollectD collectors.

TAS is enabled by default in CEEK, and a sample scheduling policy for TAS is provided for VCAC-A node deployment.

Usage

- To check the status of the TAS Extender and Controller, check the logs of the respective containers.

    $ kubectl get pods | grep telemetry-aware-scheduling   # Finds name of TAS pod
    telemetry-aware-scheduling-75596fd6b4-4qjvm   2/2   Running   2   5d3h
    $ kubectl logs <TAS POD name> -c tascont   # Logs for Controller
    2020/05/23 15:11:47 Watching Telemetry Policies
    $ kubectl logs <TAS POD name> -c tasext    # Logs for Extender
    2020/05/23 15:11:47 Extender Now Listening on HTTPS 9001

- To check the metrics available from the K8s API (a non-empty output is expected), run:

    kubectl get --raw /apis/custom.metrics.k8s.io/v1beta1 | jq .

- To define a scheduling policy, create a TASPolicy definition file and deploy it by running kubectl apply -f <Policy file name>. The following is an example for a VCAC-A node:

    apiVersion: telemetry.intel.com/v1alpha1
    kind: TASPolicy
    metadata:
      name: vca-vpu-policy
      namespace: default
    spec:
      strategies:
        deschedule:
          rules:
            - metricname: vpu_device_thermal
              operator: GreaterThan
              target: 60
        dontschedule:
          rules:
            - metricname: vpu_device_memory
              operator: GreaterThan
              target: 50
            - metricname: vpu_device_utilization
              operator: GreaterThan
              target: 50
        scheduleonmetric:
          rules:
            - metricname: vpu_device_utilization
              operator: LessThan

- To link a created policy to a workload, add the required label (telemetry-policy, whose value must match the policy name), resources (telemetry/scheduling: 1), and node affinity (affinity, whose key is also the policy name) to the workload's specification file and deploy the workload:

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: intelvpu-demo-tas
      labels:
        app: intelvpu-demo-tas
    spec:
      template:
        metadata:
          labels:
            jobgroup: intelvpu-demo-tas
            telemetry-policy: vca-vpu-policy
        spec:
          restartPolicy: Never
          containers:
            - name: intelvpu-demo-job-2
              image: ubuntu-demo-openvino
              imagePullPolicy: IfNotPresent
              command: [ "/do_classification.sh" ]
              resources:
                limits:
                  vpu.intel.com/hddl: 1
                  telemetry/scheduling: 1
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                  - matchExpressions:
                      - key: vca-vpu-policy
                        operator: NotIn
                        values:
                          - violating
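The three strategies in the example policy can be illustrated with a small, hypothetical model that evaluates them against per-node readings. This is a simplified sketch, not the TAS implementation: dontschedule filters out any node matching one of its rules, scheduleonmetric with LessThan prefers the node with the lowest reading, and deschedule flags nodes that TAS would mark as violating. The excluded argument stands in for the node affinity on the violating label. Node names and readings are made up, and only the GreaterThan and LessThan operators are modeled.

```python
import operator as op
from dataclasses import dataclass

# Only the operators used in the example TASPolicy are modeled here.
OPERATORS = {"GreaterThan": op.gt, "LessThan": op.lt}


@dataclass
class Rule:
    metricname: str
    operator: str
    target: float = 0.0  # scheduleonmetric rules carry no target


def matches(rule: Rule, node_metrics: dict) -> bool:
    """True if the node's current reading satisfies the rule's comparison."""
    return OPERATORS[rule.operator](node_metrics[rule.metricname], rule.target)


def violating_nodes(nodes: dict, deschedule: list) -> list:
    """Nodes matching any deschedule rule (TAS would label these '<policy>=violating')."""
    return sorted(name for name, m in nodes.items() if any(matches(r, m) for r in deschedule))


def pick_node(nodes: dict, dontschedule: list, scheduleonmetric: Rule, excluded=()):
    """Drop excluded nodes and nodes matching any dontschedule rule, then rank the rest."""
    candidates = {
        name: m for name, m in nodes.items()
        if name not in excluded and not any(matches(r, m) for r in dontschedule)
    }
    if not candidates:
        return None
    # LessThan prefers the lowest reading; GreaterThan prefers the highest.
    choose = min if scheduleonmetric.operator == "LessThan" else max
    return choose(candidates, key=lambda n: candidates[n][scheduleonmetric.metricname])


# Rules mirroring the vca-vpu-policy example above.
DESCHEDULE = [Rule("vpu_device_thermal", "GreaterThan", 60)]
DONTSCHEDULE = [
    Rule("vpu_device_memory", "GreaterThan", 50),
    Rule("vpu_device_utilization", "GreaterThan", 50),
]
SCHEDULEONMETRIC = Rule("vpu_device_utilization", "LessThan")

# Made-up node readings for illustration.
NODES = {
    "node-a": {"vpu_device_memory": 30, "vpu_device_utilization": 40, "vpu_device_thermal": 55},
    "node-b": {"vpu_device_memory": 70, "vpu_device_utilization": 10, "vpu_device_thermal": 50},
    "node-c": {"vpu_device_memory": 20, "vpu_device_utilization": 20, "vpu_device_thermal": 65},
}

if __name__ == "__main__":
    hot = violating_nodes(NODES, DESCHEDULE)
    print(hot)                                                    # ['node-c']
    print(pick_node(NODES, DONTSCHEDULE, SCHEDULEONMETRIC))       # node-c
    print(pick_node(NODES, DONTSCHEDULE, SCHEDULEONMETRIC, hot))  # node-a
```

Without the exclusion, the lowest-utilization node (node-c) wins even though it exceeds the thermal target; excluding violating nodes, as the workload's node affinity does, moves the pick to node-a.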

Summary

The Smart Edge Open platform provides a comprehensive set of features and components that enable users to collect, monitor, and visualize a variety of platform metrics with a strong focus on telemetry from Intel® architecture (hardware such as CPU, VCAC-A, and FPGA). It also provides the means to make intelligent scheduling decisions for performance-sensitive Edge workloads based on the performance and abilities of the hardware. Support for collecting and visualizing metrics from within applications is also provided.

The telemetry component deployment is modular (each component runs in its own pod) and easily configurable, so support for other telemetry collectors can be added in the future. Currently, only metrics telemetry is supported, but support for logging and tracing telemetry/tools for applications is planned for future releases.